Nobody likes to wait. In a hospital ward, a few extra seconds can mean the difference between spotting a problem early and missing it altogether. On a factory floor, a slow alert could let a faulty product roll through the line and waste thousands of dollars in materials. Even a retail kiosk that lags for two or three seconds is enough to make customers give up.

The common thread running through all of these is simple: if the AI can’t keep up, the decision gets made too late. Once you miss the moment, no amount of cloud power makes up for it.

Why edge makes AI faster

When AI models live in the cloud, every question has to take a round trip across the network before an answer comes back. That journey is fine if you’re checking a dashboard once a day, but it doesn’t cut it when every second matters.

Edge computing skips the trip. By keeping the data close to where it’s created, the model doesn’t waste time moving through distant servers. A vision system on a production line can spot defects before the next item even comes down the belt. A monitoring device in an oil field can raise a flag instantly, even when the nearest data center is hundreds of miles away.

That’s the real power of the edge: speed you can feel, and answers that arrive in the same moment the data does.
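To put rough numbers on that, here is a minimal Python sketch of a latency budget for the production-line case. Every figure in it (the belt rate, the network round trips, the inference times) is a made-up value for illustration, not a measurement, and `LatencyBudget`, `time_between_items_ms`, and `meets_deadline` are hypothetical helper names.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Time components of one inference request, in milliseconds."""
    network_round_trip_ms: float  # moving data to the model and back
    inference_ms: float           # the model producing an answer

    def total_ms(self) -> float:
        return self.network_round_trip_ms + self.inference_ms


def time_between_items_ms(items_per_minute: float) -> float:
    """How long the system has before the next item arrives on the belt."""
    return 60_000.0 / items_per_minute


def meets_deadline(budget: LatencyBudget, deadline_ms: float) -> bool:
    """True if the answer arrives before the next item does."""
    return budget.total_ms() <= deadline_ms


# Hypothetical numbers for illustration only; measure your own.
deadline = time_between_items_ms(items_per_minute=600)  # 100 ms per item
cloud = LatencyBudget(network_round_trip_ms=120.0, inference_ms=20.0)
edge = LatencyBudget(network_round_trip_ms=2.0, inference_ms=40.0)

print(f"deadline: {deadline:.0f} ms")
print(f"cloud: {cloud.total_ms():.0f} ms, on time: {meets_deadline(cloud, deadline)}")
print(f"edge:  {edge.total_ms():.0f} ms, on time: {meets_deadline(edge, deadline)}")
```

In this sketch the edge device is assumed to run the model more slowly than a cloud server would, and it still makes the deadline because it skips almost all of the network time.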

What makes good edge hardware for low-latency AI

To deliver real-time results, edge AI hardware needs to check a few boxes:

- Processing power

AI inference eats compute cycles fast. Hardware with the right mix of CPUs, GPUs, or accelerators makes sure models don’t choke under the load.

- Memory and throughput

Feeding data into a model without bottlenecks takes plenty of RAM and high-speed storage. Low-latency systems can’t afford to wait on slow reads and writes.

- Connectivity where it counts

Whether it’s a factory, a retail floor, or a remote energy site, the hardware has to plug into local sensors, cameras, and networks without hiccups.

- Rugged reliability

Edge deployments aren’t sheltered racks in pristine data centers. They’re often dusty, hot, or far from IT staff. The hardware has to stay reliable in less-than-ideal conditions.

- Remote visibility

If a device is miles away, teams still need to see what’s happening and manage updates without being on site. Remote management isn’t optional; it’s core to keeping systems alive.

Together, these factors mean the difference between an AI system that reacts instantly and one that lags behind reality.

Where low-latency AI matters most

Not every workload demands instant answers, but for some, even a short delay can be costly. A few areas where shaving milliseconds really pays off:

- Manufacturing lines

Vision systems spot defects or safety issues in real time. A delay of even a second can let bad products slip through or create hazards for workers.

- Healthcare monitoring

Patient wearables and imaging devices need to flag anomalies immediately, not after data has traveled to and from a distant server. Fast alerts help staff act quickly when every moment matters.

- Retail checkout

Cameras and sensors can speed up self-checkouts or reduce fraud by catching missed scans as they happen. Slow responses frustrate customers and undermine trust.

- Autonomous systems

Robots in warehouses or drones in the field can’t wait on the cloud to make decisions about navigation or obstacle avoidance. The response has to be local and near-instant.

- Smart energy grids

When demand spikes or faults appear, the grid has to rebalance in real time. Low-latency AI at the edge keeps the lights on and the system stable.

- City governments

Agencies use local processing to analyze camera feeds for public safety, coordinate emergency response, or support defense operations in the field.

In each case, the value is safer environments, smoother operations, and better customer experiences.

How to choose the right edge system

Selecting hardware for low-latency AI processing comes down to understanding the job it has to do and the environment it has to survive in. A factory floor that runs around the clock in harsh conditions needs a rugged and reliable system that can take the punishment. A retail store with dozens of cameras benefits more from compact devices that fit into tight spaces without creating heat or noise.

Connectivity is another factor. Some deployments sit in locations where network access is unreliable or expensive, which means the system must be able to work independently for long periods. Others demand strong links to the cloud for updates, coordination, or backup. In every case, IT teams need the ability to manage these systems remotely so they aren’t sending engineers out every time something changes.

The right edge system balances performance, durability, and manageability in a way that matches the workload and the setting. When those elements align, edge AI can move from trial runs into dependable, day-to-day use.
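For sites where the network link is unreliable, one common pattern is store-and-forward: keep making decisions locally, queue the results, and deliver them when the uplink comes back. The Python sketch below is a minimal illustration under that assumption. `StoreAndForward`, `fake_send`, and the `link` flag are hypothetical names, and a real deployment would also persist the queue to disk.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class StoreAndForward:
    """Hold results locally while the uplink is down; flush when it returns."""

    def __init__(self, send: Callable[[Dict], bool], max_items: int = 1000) -> None:
        self._send = send
        # A bounded deque drops the oldest entry once it is full.
        self._pending: Deque[Dict] = deque(maxlen=max_items)

    def record(self, result: Dict) -> None:
        """Queue a result, then try to deliver everything pending."""
        self._pending.append(result)
        self.flush()

    def flush(self) -> int:
        """Send queued results in order and stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break
            self._pending.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._pending)


# Simulated uplink: a stand-in for whatever transport the site really uses.
link = {"up": False}
delivered: List[Dict] = []


def fake_send(item: Dict) -> bool:
    if link["up"]:
        delivered.append(item)
    return link["up"]


buffer = StoreAndForward(fake_send)
buffer.record({"id": 1, "defect": False})  # link down: stays queued
buffer.record({"id": 2, "defect": True})   # link down: stays queued
link["up"] = True
buffer.record({"id": 3, "defect": False})  # link up: all three go out in order
print(buffer.pending(), [d["id"] for d in delivered])
```

The bounded queue is a deliberate trade-off: on a long outage the device loses its oldest results instead of running out of memory.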

Real-world applications of low-latency edge AI

Low-latency edge AI is already making its mark across different industries. In healthcare, systems near the point of care can process medical imaging on the spot, giving clinicians faster insights without waiting on remote servers. In manufacturing, AI models at the edge detect defects on the production line in real time, helping operators intervene before faulty goods move further down the chain.

The same applies in transportation, where vehicles and infrastructure rely on instant decision-making. Whether it’s traffic management that adapts to live conditions or autonomous systems that need to react in milliseconds, edge AI ensures responses keep pace with reality. Even in retail, edge devices process video and sensor data within the store to track stock levels, monitor foot traffic, and improve customer service without lag.

What ties these examples together is simple. Decisions happen where the data originates, and that speed translates directly into better outcomes: safer patients, smoother production, and smarter services.

To find the right solution for AI processing, contact us here.

FAQs about low-latency AI at the edge

Who needs low-latency AI processing?

Any organization where decisions need to happen in real time benefits from low-latency AI. Manufacturers rely on it for quality control, utilities for monitoring grid performance, retailers for customer experience, and logistics companies for route optimization. If waiting even a second could impact safety, efficiency, or revenue, low-latency AI belongs in the picture.

What’s the difference between cloud AI and edge AI for latency?

Cloud AI works well for training large models or crunching data that doesn’t need instant answers. The challenge is distance. Sending data back and forth adds delay. Edge AI cuts out the trip by processing information where it’s collected, so results appear almost instantly.

Does edge AI save money as well as time?

Yes. By analyzing data locally, companies avoid pushing massive volumes of video, sensor logs, or transactions to the cloud. That reduces bandwidth costs, lowers cloud compute bills, and makes the whole system more efficient.
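As a back-of-the-envelope illustration, the Python sketch below compares the data volume of streaming raw video to the cloud with sending only the events an edge device flags. The camera count, bitrate, event rate, and event size are hypothetical placeholders, and the function names are made up for this example. Plug in your own figures and your provider's pricing to turn volume into cost.

```python
def monthly_stream_gb(mbps_per_stream: float, streams: int,
                      hours_per_day: float, days: int = 30) -> float:
    """Gigabytes uploaded by continuous streams (8 bits per byte, 1 GB = 1000 MB)."""
    seconds = hours_per_day * 3600 * days
    megabits = mbps_per_stream * streams * seconds
    return megabits / 8 / 1000


def monthly_event_gb(events_per_day: int, kb_per_event: float, days: int = 30) -> float:
    """Gigabytes uploaded when only flagged events leave the site (1 GB = 1,000,000 KB)."""
    return events_per_day * kb_per_event * days / 1_000_000


# Hypothetical site: 12 cameras at 4 Mbps, running around the clock.
raw_gb = monthly_stream_gb(mbps_per_stream=4.0, streams=12, hours_per_day=24)

# Hypothetical edge setup: 500 flagged events a day at about 200 KB each.
edge_gb = monthly_event_gb(events_per_day=500, kb_per_event=200.0)

print(f"raw video to cloud: {raw_gb:,.0f} GB per month")
print(f"edge events only:   {edge_gb:,.0f} GB per month")
```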

What kind of hardware do I need for low-latency AI?

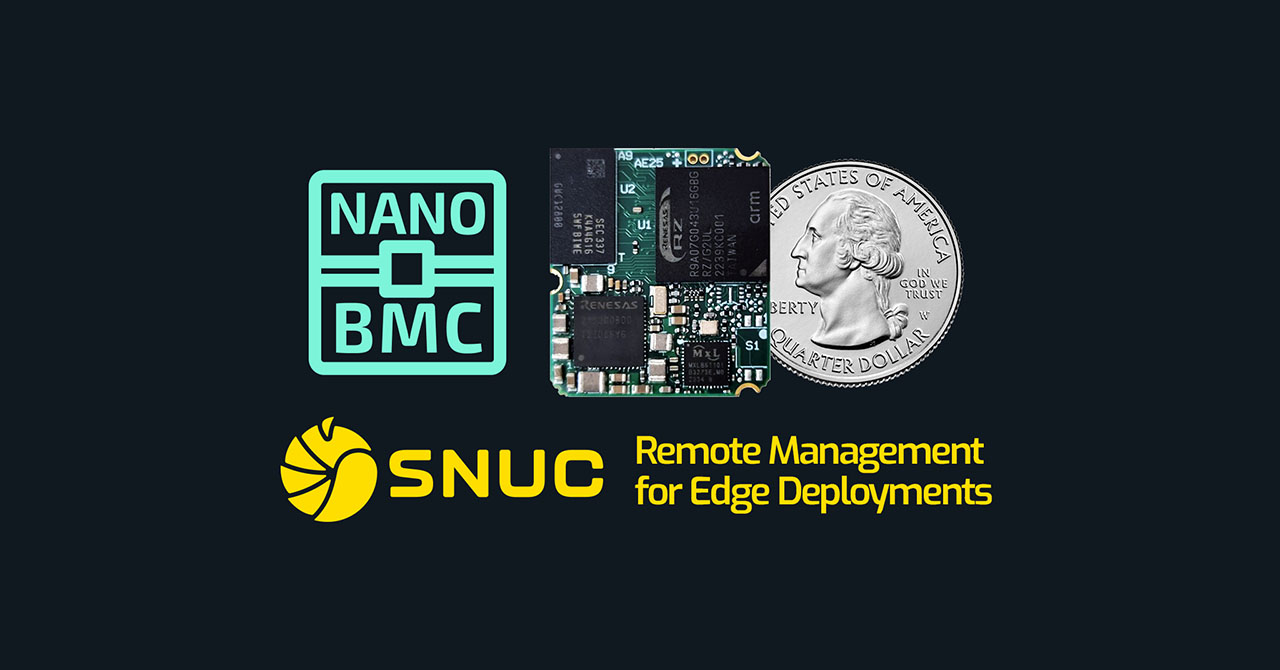

The best setup depends on the environment. Compact systems with GPUs work well in offices, retail sites, or smaller industrial spaces. Ruggedized servers handle harsher conditions, like remote facilities or outdoor deployments. SNUC’s Cyber Canyon, Onyx, and extremeEdge servers are examples of hardware designed with these needs in mind.

Is low-latency AI hard to manage across multiple sites?

Not with the right tools. Modern edge servers come with built-in remote management, so IT teams can monitor, update, and troubleshoot devices from a central location. That means less time traveling between sites and more time focusing on performance.
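What central monitoring looks like varies by vendor, but the basic idea can be sketched in a few lines of Python: each device reports in periodically, and a central check flags any that have gone quiet. This is an illustrative sketch only, not any product's management API. The device names, timestamps, and the `stale_devices` helper are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def stale_devices(last_seen: Dict[str, datetime], now: datetime,
                  max_silence: timedelta) -> List[str]:
    """Return the names of devices that have not reported within max_silence."""
    return sorted(name for name, seen in last_seen.items()
                  if now - seen > max_silence)


# Hypothetical fleet and check-in times.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_seen = {
    "factory-a": now - timedelta(minutes=2),
    "store-12": now - timedelta(minutes=45),
    "wellsite-3": now - timedelta(hours=6),
}

print(stale_devices(last_seen, now, max_silence=timedelta(minutes=15)))
# prints ['store-12', 'wellsite-3']
```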